CORE CONCEPT OF MACHINE LEARNING

The core concept of machine learning is teaching computers to learn and make decisions without being explicitly programmed. Instead of telling a computer what to do in every possible situation, we give it many examples of what we want it to do, and it learns from those examples how to do it.

Machine learning can be used for many different tasks, such as predicting sales, identifying spam emails, or translating languages. It’s a very powerful tool that can save time and increase accuracy in many areas of work and research.

SUPERVISED LEARNING

Supervised learning is a type of machine learning where the computer model is trained on labeled data. Labeled data means that the input data is already tagged with the correct output or target value.

For example, let’s say we want to train a machine learning model to predict whether a given email is spam or not. In supervised learning, we would start with a dataset of emails that have already been labeled as spam or not spam. This labeled dataset would be used to train the model to recognize patterns in the input data that are associated with spam emails.

During the training process, the model learns from the labeled data by adjusting its internal parameters to minimize the difference between its predicted output and the correct output. Once the model has been trained on the labeled data, it can be used to make predictions on new, unseen data.
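
To make the training process concrete, here is a minimal sketch of a supervised spam classifier in plain Python. It assumes each email has already been reduced to two hypothetical numeric features (how often the word “free” appears and how many links it contains) and uses logistic regression trained by gradient descent. The dataset, the features, the learning rate and the number of epochs are illustrative assumptions, not a recommended setup.

```python
import math

# Hypothetical toy dataset: each email is reduced to two features,
# [times the word "free" appears, number of links]; label 1 = spam, 0 = not spam.
X = [[3, 5], [2, 4], [4, 6], [0, 1], [1, 0], [0, 0]]
y = [1, 1, 1, 0, 0, 0]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, x):
    """Model output: estimated probability that the email x is spam."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def log_loss(weights, bias, X, y):
    """Average cross-entropy: how far the predictions are from the correct labels."""
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for x, t in zip(X, y):
        p = predict_proba(weights, bias, x)
        total -= t * math.log(p + eps) + (1 - t) * math.log(1 - p + eps)
    return total / len(X)


def train(X, y, lr=0.1, epochs=1000):
    """Fit logistic-regression parameters with batch gradient descent."""
    weights = [0.0] * len(X[0])
    bias = 0.0
    n = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, t in zip(X, y):
            error = predict_proba(weights, bias, x) - t  # predicted minus correct output
            for j, xj in enumerate(x):
                grad_w[j] += error * xj / n
            grad_b += error / n
        # Move each parameter against its gradient to reduce the loss.
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias


weights, bias = train(X, y)
print(log_loss([0.0, 0.0], 0.0, X, y))  # before training: ln 2, about 0.693
print(log_loss(weights, bias, X, y))    # after training: much lower
print(predict_proba(weights, bias, [5, 7]))  # a new, unseen email
```

With all parameters at zero the model predicts a probability of 0.5 for every email, so the starting loss is ln 2; each gradient step adjusts the weights and bias so that the gap between predicted and correct labels shrinks.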

Supervised learning is a widely used approach in machine learning and can be used for a variety of tasks, such as image classification, speech recognition, and predicting housing prices. One advantage of supervised learning is that the model learns directly from existing labeled examples instead of relying on hand-written rules, which can save a lot of time and effort in model development. Because the correct answers are known, the labels also make it straightforward to measure how accurate the model’s predictions are.

UNSUPERVISED LEARNING

Unsupervised learning is a type of machine learning where the computer model is trained on unlabeled data. Unlike supervised learning, there are no predefined target variables or labels associated with the input data.

Instead, the unsupervised learning model must identify patterns, relationships, and structures in the data on its own. The model looks for similarities and differences among the input data and tries to group similar data points together.

For example, if we had a dataset of customer transactions with no labels, an unsupervised learning model might cluster the data into groups of customers with similar buying behaviors. This could help a business identify segments of customers with similar needs and preferences.
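
A minimal sketch of this kind of customer segmentation, using the k-means algorithm (one common clustering method, chosen here for illustration). The two features, average purchase amount and purchases per month, and the six customers are hypothetical. The comments describing the two kinds of shopper are for the reader only; the algorithm never sees any labels.

```python
import math
import random

# Hypothetical customers: (average purchase amount, purchases per month).
customers = [
    (20, 1), (25, 2), (22, 1),      # occasional, low-spend shoppers
    (200, 8), (210, 10), (190, 9),  # frequent, high-spend shoppers
]


def kmeans(points, k, iterations=100, seed=0):
    """Lloyd's k-means: alternate between assigning points and moving centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen data points
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its points (keep it if empty).
        new_centroids = [
            tuple(sum(dim) / len(c) for dim in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:  # nothing moved, so the clustering has converged
            break
        centroids = new_centroids
    return centroids, clusters


centroids, clusters = kmeans(customers, k=2)
for center, members in zip(centroids, clusters):
    print(center, members)
```

On this data the algorithm separates the occasional, low-spend shoppers from the frequent, high-spend ones. In practice the features would usually be scaled first, because k-means relies on distances and a feature with a larger numeric range dominates them.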

Unsupervised learning is often used for exploratory data analysis, clustering, and anomaly detection. It can be useful in cases where we don’t have labeled data or when we want to discover new insights or hidden patterns in the data. However, because unsupervised learning doesn’t have predefined labels, it can be more challenging to evaluate and validate the performance of the model.
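
As a small example of anomaly detection without labels, the sketch below flags values whose z-score (distance from the mean, measured in standard deviations) exceeds a threshold. The transaction amounts and the threshold of 2.0 are assumptions for illustration.

```python
import statistics

# Hypothetical daily transaction totals for one account; nothing is labeled as fraud.
amounts = [52, 48, 50, 47, 53, 49, 51, 500]


def zscore_anomalies(values, threshold=2.0):
    """Return values more than `threshold` standard deviations away from the mean."""
    mean = statistics.mean(values)
    stdev = statistics.pstdev(values)  # population standard deviation
    if stdev == 0:
        return []  # all values identical: nothing stands out
    return [v for v in values if abs(v - mean) / stdev > threshold]


print(zscore_anomalies(amounts))  # [500]
```

With only eight values, no point can have a z-score above the square root of 7 (about 2.65), which is why the threshold here is 2.0 rather than the often-quoted 3; the outlier also inflates the very mean and standard deviation it is measured against.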

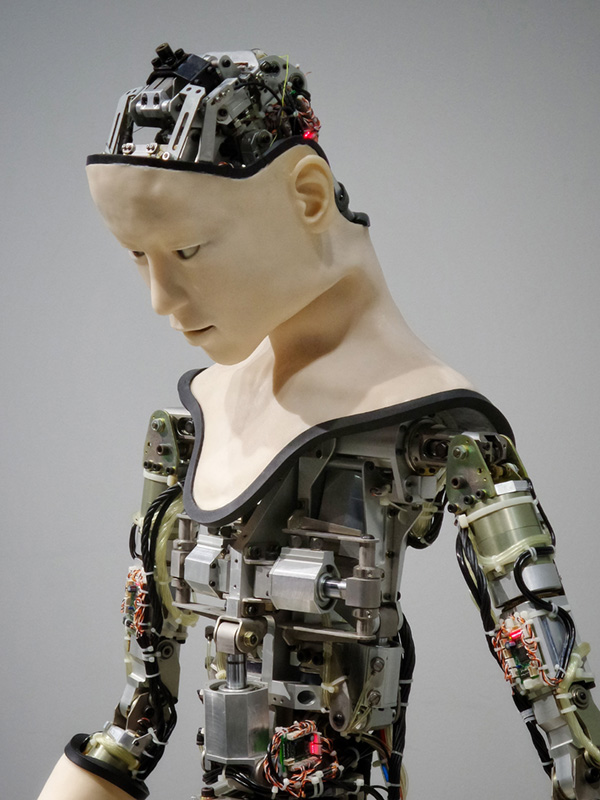

NEURAL NETWORKS

Neural networks are a type of machine learning model loosely inspired by the structure and function of the human brain. They are used for a variety of tasks, such as image recognition, natural language processing, and speech recognition.

Neural networks consist of layers of interconnected nodes, called neurons, which process information and pass it to one another. Each neuron takes inputs from neurons in the previous layer, computes a weighted sum of those inputs plus a bias, applies an activation function to the result, and sends its output to neurons in the next layer.
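
The computation inside each neuron can be sketched in a few lines. This assumes the most common formulation, a weighted sum of the inputs plus a bias passed through an activation function (ReLU here); the weights, biases and layer sizes are hand-picked for illustration rather than learned.

```python
def relu(z):
    """A common activation function: pass positive values, clip negatives to 0."""
    return max(0.0, z)


def neuron(inputs, weights, bias, activation=relu):
    """One neuron: weighted sum of its inputs plus a bias, then an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


def layer(inputs, weight_rows, biases, activation=relu):
    """A layer is several neurons reading the same inputs; one output per neuron."""
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]


# Hypothetical network: 2 inputs -> hidden layer of 2 neurons -> 1 output neuron.
x = [1.0, 2.0]
hidden = layer(x, weight_rows=[[0.5, -1.0], [1.0, 1.0]], biases=[0.0, -1.0])
output = layer(hidden, weight_rows=[[2.0, 0.5]], biases=[0.25])
print(hidden)  # [0.0, 2.0]
print(output)  # [1.25]
```

The first hidden neuron’s weighted sum is 0.5*1 - 1.0*2 = -1.5, so ReLU outputs 0; the outputs of the hidden layer then become the inputs of the next layer.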

The neural network learns by adjusting its weights (the strengths of the connections between neurons) and biases (a per-neuron offset) during the training process. This involves feeding the network a large dataset of input and output examples and repeatedly updating the weights and biases, typically with gradient descent using gradients computed by backpropagation, to minimize the error between the predicted output and the actual output.
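
This training loop can be sketched for a very small network: 2 inputs, 4 hidden neurons with a tanh activation and 1 sigmoid output, trained on the XOR problem with full-batch gradient descent on mean squared error. Backpropagation is written out by hand so that each gradient is visible. The architecture, loss, learning rate, random seed and number of steps are arbitrary choices for this sketch; libraries such as PyTorch or JAX compute these gradients automatically.

```python
import math
import random

# XOR: the output is 1 only when exactly one input is 1. No single linear neuron can fit it.
X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
Y = [0.0, 1.0, 1.0, 0.0]

H = 4  # number of hidden neurons (arbitrary for this sketch)
rng = random.Random(42)
W1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(H)]  # input -> hidden weights
b1 = [0.0] * H                                                   # hidden biases
W2 = [rng.uniform(-1, 1) for _ in range(H)]                      # hidden -> output weights
b2 = 0.0                                                         # output bias


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x):
    """Return the hidden activations and the network's prediction for input x."""
    h = [math.tanh(W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j]) for j in range(H)]
    y_hat = sigmoid(sum(W2[j] * h[j] for j in range(H)) + b2)
    return h, y_hat


def mse():
    """Mean squared error between predictions and targets over the whole dataset."""
    return sum((forward(x)[1] - y) ** 2 for x, y in zip(X, Y)) / len(X)


def train_step(lr=0.5):
    """One full-batch gradient-descent step; gradients come from backpropagation."""
    global b2
    gW1 = [[0.0, 0.0] for _ in range(H)]
    gb1 = [0.0] * H
    gW2 = [0.0] * H
    gb2 = 0.0
    n = len(X)
    for x, y in zip(X, Y):
        h, y_hat = forward(x)
        # Error signal at the output: derivative of the loss w.r.t. the output pre-activation.
        d_out = 2 * (y_hat - y) * y_hat * (1 - y_hat) / n
        gb2 += d_out
        for j in range(H):
            gW2[j] += d_out * h[j]
            # Send the error back through W2 and the tanh derivative (1 - h^2).
            d_hid = d_out * W2[j] * (1 - h[j] ** 2)
            gW1[j][0] += d_hid * x[0]
            gW1[j][1] += d_hid * x[1]
            gb1[j] += d_hid
    # Update every weight and bias a small step against its gradient.
    for j in range(H):
        W1[j][0] -= lr * gW1[j][0]
        W1[j][1] -= lr * gW1[j][1]
        b1[j] -= lr * gb1[j]
        W2[j] -= lr * gW2[j]
    b2 -= lr * gb2


before = mse()
for _ in range(5000):
    train_step()
after = mse()
print(f"MSE before training: {before:.3f}, after: {after:.3f}")
print([round(forward(x)[1], 2) for x in X])
```

The final error should be well below the starting error; how close the predictions get to exactly 0 and 1 depends on the random initialization and the number of steps.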

Once the network has been trained, it can be used to make predictions on new, unseen data. The input data is fed into the network, and the network produces an output that represents the predicted value or class.

Neural networks have shown remarkable performance in a variety of applications and are one of the most widely used machine learning algorithms. They can be used for both supervised and unsupervised learning, and their ability to learn from large amounts of data makes them well-suited for complex problems that are difficult to solve using traditional rule-based programming.

DEEP LEARNING

Deep learning is a subfield of machine learning that uses neural networks with many layers (hence the name “deep”) to learn complex representations of data. Deep learning models are capable of learning from large amounts of data and can be used for a wide range of applications, such as image recognition, speech recognition, and natural language processing.

The key advantage of deep learning is its ability to automatically learn hierarchical representations of data. Each layer of the neural network learns to identify increasingly complex features and patterns, building on the information learned in the previous layer. This allows the model to learn very high-level abstractions of the input data, which can be used to make predictions or decisions with a high degree of accuracy.

For example, in image recognition, a deep learning model can learn to identify basic features such as edges and corners in the first layer, then build on those features to recognize more complex shapes and patterns in the subsequent layers. By the final layer, the model has learned to recognize the overall object in the image with a high level of accuracy.
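
The “edges first” idea can be illustrated with a single convolutional filter. In trained image models the first-layer filters are learned from data and often end up resembling simple edge detectors like this one; here the filter is set by hand, and the 4x4 image is a made-up example with a dark left half and a bright right half.

```python
def convolve2d(image, kernel):
    """'Valid' sliding-window filter (cross-correlation, as most deep learning libraries compute it)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[a][b] * image[i + a][j + b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A tiny grayscale image: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# A hand-set vertical-edge detector; a trained network would learn its own values.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

print(convolve2d(image, vertical_edge))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The large values in the middle column mark where brightness changes from left to right, which is exactly where the vertical edge lies. Later layers would combine many such feature maps into detectors for more complex shapes.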

Deep learning requires large amounts of data and computational power to train the models, but advances in technology have made it increasingly accessible and applicable to a wide range of domains. Deep learning has led to breakthroughs in many fields, such as autonomous driving, medical diagnosis, and natural language processing.

FEATURE ENGINEERING

Feature engineering is the process of selecting and transforming raw data into features that can be used by machine learning algorithms to make predictions or decisions. It involves identifying the most relevant features or variables in the data and creating new features that capture important relationships and patterns.

Feature engineering is a critical step in the machine learning process because the performance of the model is heavily dependent on the quality and relevance of the features used. Good feature engineering can help improve the accuracy and efficiency of the model, while poor feature engineering can lead to inaccurate or unreliable predictions.

There are several techniques used in feature engineering, including:

- Feature selection: Choosing the most relevant features from the available data. This can involve using statistical methods or domain knowledge to identify the features that are most important for the task at hand.

- Feature transformation: Transforming the features into a more useful representation. This can involve scaling, normalizing, or encoding the data in a way that makes it more suitable for the machine learning algorithm.

- Feature creation: Creating new features by combining or modifying existing features. This can involve creating interaction terms, engineering time-based features, or applying domain-specific knowledge to create new features.
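
The transformation and creation techniques above can be sketched on a small, hypothetical house-price dataset (the column names and values are invented for illustration). The sketch min-max scales the area, one-hot encodes the city, creates an area-per-room ratio, and derives month and weekend features from the listing date. Feature selection is left out because it depends on how each feature relates to the target.

```python
from datetime import datetime

# Hypothetical raw records for a house-price model.
houses = [
    {"area_m2": 50.0, "rooms": 2, "city": "Lyon", "listed": "2023-01-15"},
    {"area_m2": 120.0, "rooms": 5, "city": "Paris", "listed": "2023-06-05"},
    {"area_m2": 80.0, "rooms": 3, "city": "Nice", "listed": "2023-12-20"},
]


def min_max_scale(values):
    """Transformation: rescale numbers to the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) if hi > lo else 0.0 for v in values]


def one_hot(values):
    """Transformation: encode a categorical column as 0/1 indicator columns."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values], categories


def engineer(rows):
    """Turn raw records into a list of feature names and numeric feature rows."""
    scaled_area = min_max_scale([r["area_m2"] for r in rows])
    city_cols, cities = one_hot([r["city"] for r in rows])
    features = []
    for r, area, city in zip(rows, scaled_area, city_cols):
        listed = datetime.strptime(r["listed"], "%Y-%m-%d")
        created = [
            r["area_m2"] / r["rooms"],           # creation: combine two raw columns
            listed.month,                        # creation: time-based feature
            1 if listed.weekday() >= 5 else 0,   # creation: listed on a weekend?
        ]
        features.append([area] + city + created)
    names = ["area_scaled"] + [f"city_{c}" for c in cities] + ["area_per_room", "month", "weekend"]
    return names, features


names, features = engineer(houses)
print(names)
for row in features:
    print(row)
```

In a real pipeline the scaling range (minimum and maximum) would be computed on the training data only and reused for the test data, so that no information leaks from the test set into the features.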

Overall, feature engineering is an iterative process that requires domain knowledge, creativity, and careful evaluation to ensure that the features selected and engineered are relevant and useful for the machine learning task.

MODEL EVALUATION

Model evaluation is the process of assessing the performance of a machine learning model on a specific task. The goal of model evaluation is to determine how well the model is able to make accurate predictions or decisions based on new, unseen data.

There are several methods for evaluating machine learning models, including:

- Holdout method: Splitting the available data into training and testing sets, where the training set is used to train the model and the testing set is used to evaluate its performance.

- Cross-validation: Dividing the data into multiple folds, where each fold is used once as the testing set while the remaining folds are used as the training set. Averaging the results over all folds gives a more robust estimate of the model’s performance than a single split.

- Metrics: Using specific performance metrics to evaluate the model’s accuracy, precision, recall, F1 score, and other relevant measures based on the specific task.

- Visualization: Plotting the model’s predictions against the actual outcomes to visualize the model’s performance and identify areas for improvement.
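
The holdout method, cross-validation and common classification metrics can be sketched in plain Python. The function names and the example labels are hypothetical; libraries such as scikit-learn provide tested equivalents (for instance `train_test_split`, `KFold` and `precision_recall_fscore_support`).

```python
import random


def holdout_split(n, test_fraction=0.2, seed=0):
    """Holdout method: shuffle row indices, then reserve a fraction for testing."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_test = int(n * test_fraction)
    return idx[n_test:], idx[:n_test]  # (train indices, test indices)


def k_fold_indices(n, k):
    """Cross-validation: yield (train, test) index lists; each fold is the test set once.
    Rows are taken in order here; shuffle them first if the data is sorted."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size


def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive class)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical test-set labels and model predictions.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 1, 1, 1, 0]
print(classification_metrics(y_true, y_pred))
for train_idx, test_idx in k_fold_indices(10, 3):
    print(len(train_idx), test_idx)
```

For these labels the model finds 3 of the 4 actual positives (recall 0.75), but 2 of its 5 positive predictions are wrong (precision 0.6), a distinction that accuracy alone (0.625) does not show.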

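The choice of evaluation method and metrics depends on the specific task and the type of model being evaluated. Appropriate evaluation methods are important for detecting overfitting (the model performs well on its training data but poorly on new data) and underfitting (the model is too simple to capture the patterns even in its training data).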

Overall, model evaluation is a critical step in the machine learning process that allows for the assessment of the model’s ability to generalize to new data and make accurate predictions or decisions.

BIAS AND FAIRNESS

Bias and fairness are important considerations in machine learning that relate to the potential for models to perpetuate or exacerbate social or cultural biases.

Bias in machine learning refers to the tendency of models to produce inaccurate or unfair results due to the limitations of the data used to train them. For example, if a model is trained on data that is biased against certain groups, such as women or people of color, the model may perpetuate that bias by producing inaccurate or unfair results for those groups.

Fairness in machine learning refers to the goal of ensuring that models produce unbiased and fair results for all individuals or groups, regardless of their race, gender, or other demographic factors. This involves identifying potential sources of bias in the data and model and implementing measures to address them, such as reweighting the data or adjusting the model’s parameters.
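
One concrete way of reweighting the data, often called reweighing, gives each training example the weight P(group) * P(label) / P(group, label), which makes group membership and the label statistically independent in the weighted data. The sketch applies it to a hypothetical dataset in which group A receives the favorable label far more often than group B; the data and function names are illustrative.

```python
from collections import Counter

# Hypothetical training data as (group, label) pairs; label 1 = favorable outcome.
samples = [("A", 1), ("A", 1), ("A", 1), ("A", 0),
           ("B", 1), ("B", 0), ("B", 0), ("B", 0)]


def reweigh(samples):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(samples)
    group_counts = Counter(g for g, _ in samples)
    label_counts = Counter(y for _, y in samples)
    joint_counts = Counter(samples)
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in samples
    ]


def weighted_favorable_rate(samples, weights, group):
    """Share of a group's total weight that carries the favorable label."""
    num = sum(w for (g, y), w in zip(samples, weights) if g == group and y == 1)
    den = sum(w for (g, _), w in zip(samples, weights) if g == group)
    return num / den


weights = reweigh(samples)
print([round(w, 3) for w in weights])  # [0.667, 0.667, 0.667, 2.0, 2.0, 0.667, 0.667, 0.667]
print(weighted_favorable_rate(samples, weights, "A"), weighted_favorable_rate(samples, weights, "B"))
```

Rare combinations (an unfavorable label in group A, a favorable one in group B) are up-weighted, so in the weighted data both groups have a favorable-label rate of 0.5 and group membership alone no longer predicts the label.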

There are several techniques and tools available to mitigate bias and ensure fairness in machine learning, such as:

- Fairness metrics: Using metrics to measure and quantify the fairness of the model’s predictions, such as disparate impact, demographic parity, or equalized odds.

- Data preprocessing: Preprocessing the data to remove or mitigate sources of bias, such as by oversampling underrepresented groups or using data augmentation techniques.

- Model adjustments: Adjusting the model’s parameters or structure to mitigate bias and ensure fairness, such as by using regularization or modifying the loss function.

- Algorithmic transparency: Providing transparency into the model’s decision-making process to allow for the identification and correction of potential biases.
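
The fairness metrics named above can be computed directly from a model’s predictions. This is a minimal sketch for binary predictions and two groups; the group names, the data, and the convention of dividing the unprivileged group’s selection rate by the privileged group’s are assumptions for illustration. The 0.8 reference point in the comments reflects the “four-fifths rule” commonly used as a rule of thumb for disparate impact.

```python
# Hypothetical predictions for eight applicants in two groups (1 = favorable outcome).
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]


def group_rates(groups, y_true, y_pred, group):
    """Selection rate, true-positive rate and false-positive rate within one group."""
    rows = [(t, p) for g, t, p in zip(groups, y_true, y_pred) if g == group]
    preds = [p for _, p in rows]
    on_pos = [p for t, p in rows if t == 1]  # predictions for truly positive cases
    on_neg = [p for t, p in rows if t == 0]  # predictions for truly negative cases
    return {
        "selection": sum(preds) / len(preds),
        "tpr": sum(on_pos) / len(on_pos) if on_pos else 0.0,
        "fpr": sum(on_neg) / len(on_neg) if on_neg else 0.0,
    }


def fairness_report(groups, y_true, y_pred, privileged, unprivileged):
    a = group_rates(groups, y_true, y_pred, privileged)
    b = group_rates(groups, y_true, y_pred, unprivileged)
    return {
        # Demographic parity: equal selection rates; 0.0 means parity.
        "demographic_parity_difference": a["selection"] - b["selection"],
        # Disparate impact: ratio of selection rates; values below 0.8 are commonly flagged.
        "disparate_impact_ratio": b["selection"] / a["selection"] if a["selection"] else float("nan"),
        # Equalized odds: equal TPR and FPR; report the larger of the two gaps.
        "equalized_odds_gap": max(abs(a["tpr"] - b["tpr"]), abs(a["fpr"] - b["fpr"])),
    }


report = fairness_report(groups, y_true, y_pred, privileged="A", unprivileged="B")
print(report)
```

Here group A is selected three times as often as group B (a disparate impact ratio of about 0.33), and the model both catches more true positives and raises more false alarms for group A, so all three metrics point to unequal treatment.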

Overall, ensuring fairness and mitigating bias in machine learning is a critical step in ensuring that the models are accurate, reliable, and fair for all individuals and groups.